Since I switched from web development and ecommerce as my main field of work to infrastructure development and cloud computing at Twilio, I haven’t been blogging much. This is not because I don’t have interesting topics to talk about, but because the quantity and complexity of the things I’m dealing with are overwhelming and take up most of my time.

I recently gave an internal talk on self-adaptive systems at Twilio, which was quite well received, so I decided to post my thoughts to inspire more people out there to build these kinds of systems and, more importantly, frameworks for building them.

Adaptation in nature

Some of the greatest examples of adaptation come from nature. Systems like the human body, colonies of ants and termites, and flocks of birds are far more complex than any adaptive system we have built to date. These systems are also decentralized and consist of a number of primitive lower-level components whose collaboration produces very complex and intelligent behavior at a higher level.

Adaptation in nature is typically built around negative and positive feedback loops, where the former are designed to stop a behavior, while the latter are there to reinforce it. When ants find food, for example, they leave a pheromone trail on their way back, letting other ants find their way to the source of food. The more ants find food by following the scent, the stronger the path smells, attracting even more ants to it. Similarly, when ants encounter a source of danger, they produce another pheromone to warn other ants of the danger ahead.

These systems are also distributed and unbelievably fault tolerant. When we catch a cold, our immune system redirects some of our body’s resources towards fighting the virus, while we remain operational for the most part. Certain types of sea stars can even recover from being cut in half, yet software cannot recover from serious data loss and hardware won’t work when broken in half.

Real world application at Twilio

This post is highly practical and is in fact the result of applying the described model to a real-world system at Twilio.

At Twilio I am in charge of an internal tool called BoxConfig. BoxConfig is an HTTP API for provisioning cloud instances, with a bunch of additional functionality such as keeping track of a machine’s status and making sure it is monitored by Nagios and receives traffic from internal load balancers, depending on the machine’s purpose.

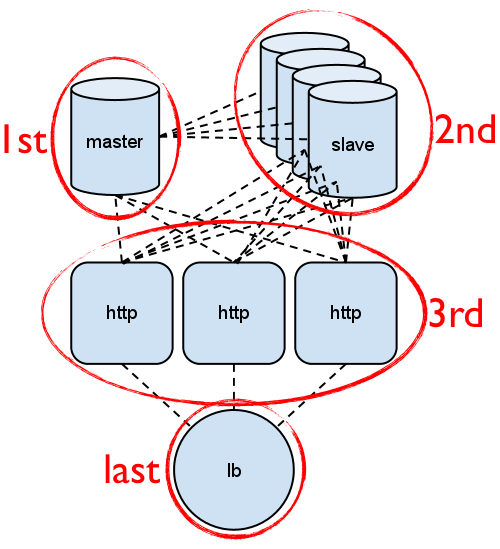

Working with individual machine instances through a programmable API (and a nice HTML5 application that I built) is great, but we needed a way to manage sets of hosts with ease. We wanted to be able to define what we call a host group, consisting of a number of different host types and meaningful relationships between them. Such relationships would then let us determine the order in which these hosts need to boot and how to manage other aspects of their lifecycle.
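To make the idea of deriving a boot order from relationships concrete, here is a minimal sketch using Ruby's standard `TSort` module. The `HostGroup` class, the host type names, and the "depends on" relationship are assumptions made for illustration; they are not BoxConfig's actual data model.

```ruby
require "tsort"

# Hypothetical host group: maps each host type to the types it depends on.
class HostGroup
  include TSort

  def initialize(dependencies)
    @dependencies = dependencies
  end

  # TSort callback: enumerate every host type in the group.
  def tsort_each_node(&block)
    @dependencies.each_key(&block)
  end

  # TSort callback: enumerate the host types a given type depends on.
  def tsort_each_child(host_type, &block)
    @dependencies.fetch(host_type, []).each(&block)
  end

  # Dependencies are sorted before their dependents, which gives a boot order.
  def boot_order
    tsort
  end
end

group = HostGroup.new(
  "database"      => [],
  "app"           => ["database"],
  "load_balancer" => ["app"]
)

p group.boot_order # => ["database", "app", "load_balancer"]
```

A circular relationship makes `tsort` raise `TSort::Cyclic`, which is a reasonable signal that the group definition itself is invalid.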

Solution

While building a distributed asynchronous task queue with workflow primitives like task sets and sequences seemed like a great solution at first, we quickly discovered that computing the steps in advance is useless if one of the hosts in a group gets shut down during boot, or if a long-running task gets killed. We needed a mechanism that would periodically check the state of a group and determine what to do next. This is how my research into adaptive systems and adaptation rules began. As a result of that research, I implemented such a system, and I’m hoping to create a framework for building these kinds of external or internal adaptation loops, so that both new and existing systems can become capable of adaptation.

Rules engine and ECA

An important part of any adaptation is specifying adaptation rules. While in most biological systems all of those rules are written in a cell’s DNA, for a software system I needed to find a good framework for defining them. I decided to stick with the ECA (event-condition-action) rule structure, best known for its use in defining database triggers. The idea is that each rule consists of an action to be triggered on a certain event, provided an accompanying condition is satisfied.

[Code listing: Ruby DSL with periodic rules, 14 lines; the code itself is not preserved here.]

Above is a Ruby DSL I used for defining these rules. This DSL is capable of defining two types of rules: event rules, which are explicitly triggered by an external event, and periodic checks, which are evaluated at each cycle of the main control loop. What you see above are periodic rules, and an event rule would look like:

[Code listing: event rule in the same Ruby DSL, 3 lines; the code itself is not preserved here.]
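As a rough illustration of the two rule types, and not the original DSL, a rule set could be sketched as follows. The `RuleSet` class, the `check` and `on` methods, the `:host_terminated` event, and the shape of the group state hash are all assumptions made for this example.

```ruby
# Hypothetical ECA rule engine; every name here is an illustrative assumption.
Rule = Struct.new(:trigger, :condition, :action)

class RuleSet
  def initialize(&block)
    @periodic_rules = []
    @event_rules = Hash.new { |hash, key| hash[key] = [] }
    instance_eval(&block) if block
  end

  # Periodic check: evaluated on every cycle of the control loop.
  def check(condition, action)
    @periodic_rules << Rule.new(:periodic, condition, action)
  end

  # Event rule: evaluated only when the named event is triggered.
  def on(event, condition, action)
    @event_rules[event] << Rule.new(event, condition, action)
  end

  def run_cycle(state)
    fire(@periodic_rules, state)
  end

  def trigger(event, state)
    fire(@event_rules.fetch(event, []), state)
  end

  private

  # Run the action of every rule whose condition holds; collect the results.
  def fire(rules, state)
    rules.select { |rule| rule.condition.call(state) }
         .map { |rule| rule.action.call(state) }
  end
end

rules = RuleSet.new do
  check ->(group) { group[:running] < group[:desired] },
        ->(group) { "boot #{group[:desired] - group[:running]} host(s)" }

  on :host_terminated,
     ->(group) { group[:running] < group[:desired] },
     ->(_group) { "boot replacement host" }
end

state = { running: 1, desired: 3 }
p rules.run_cycle(state)                 # => ["boot 2 host(s)"]
p rules.trigger(:host_terminated, state) # => ["boot replacement host"]
```

Here the actions only return a description of what they would do; in a real system they would call the effectors of the managed system instead.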

I also added some boolean logic primitives for combining and negating conditions, so that I can reuse the conditions I already have instead of explicitly writing out every possible combination.

Each action and condition in my implementation is represented by a Ruby class that looks like this:
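One way such boolean primitives could work is sketched below. The `Condition` class and the `&`, `|` and `negate` combinators are assumptions for illustration, not the primitives from my implementation; the point is that a combined condition is itself a condition and carries a combined description.

```ruby
# Hypothetical condition wrapper; the combinator names are assumptions.
class Condition
  attr_reader :description

  def initialize(description, &predicate)
    @description = description
    @predicate = predicate
  end

  def satisfied?(state)
    @predicate.call(state)
  end

  # Logical AND of two conditions.
  def &(other)
    Condition.new("(#{description} and #{other.description})") do |state|
      satisfied?(state) && other.satisfied?(state)
    end
  end

  # Logical OR of two conditions.
  def |(other)
    Condition.new("(#{description} or #{other.description})") do |state|
      satisfied?(state) || other.satisfied?(state)
    end
  end

  # Logical negation of a condition.
  def negate
    Condition.new("not #{description}") { |state| !satisfied?(state) }
  end
end

under_capacity = Condition.new("group is under capacity") { |g| g[:running] < g[:desired] }
booting        = Condition.new("a boot is in progress") { |g| g[:booting] > 0 }

# Boot a new host only when capacity is missing and nothing is booting yet.
should_boot = under_capacity & booting.negate

puts should_boot.description
# => (group is under capacity and not a boot is in progress)
p should_boot.satisfied?({ running: 1, desired: 2, booting: 0 }) # => true
p should_boot.satisfied?({ running: 1, desired: 2, booting: 1 }) # => false
```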

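[Code listing: Ruby condition class, 7 lines; the code itself is not preserved here.]

[Code listing: Ruby action class, 7 lines; the code itself is not preserved here.]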

As you can see, each condition and action has an accompanying description. These descriptions are used to log every decision that the control loop makes during its lifetime. Every decision is displayed to the user and contains important information such as its timestamp, the action taken, the reason, and the trigger, which is either an event name or a periodic check.
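The sketch below shows how descriptions on conditions and actions could feed such a decision log. The class names (`GroupUnderCapacity`, `BootHost`, `DecisionLog`), the `satisfied?` and `execute` methods, and the log line format are assumptions for illustration and are not taken from my implementation.

```ruby
require "time"

# One logged decision: when it happened, what triggered it, why, and what was done.
Decision = Struct.new(:timestamp, :trigger, :reason, :action) do
  def to_s
    "#{timestamp.getutc.iso8601} [#{trigger}] #{action} because #{reason}"
  end
end

# Hypothetical condition class with a human-readable description.
class GroupUnderCapacity
  def description
    "group has fewer running hosts than desired"
  end

  def satisfied?(group)
    group[:running] < group[:desired]
  end
end

# Hypothetical action class with a human-readable description.
class BootHost
  def description
    "boot one more host"
  end

  def execute(group)
    group[:running] += 1
  end
end

class DecisionLog
  attr_reader :decisions

  # The clock is injectable so that timestamps can be fixed in tests.
  def initialize(clock: -> { Time.now })
    @clock = clock
    @decisions = []
  end

  # Execute the action if the condition holds, and record why it was taken.
  def apply(trigger, condition, action, group)
    return unless condition.satisfied?(group)

    action.execute(group)
    @decisions << Decision.new(@clock.call, trigger, condition.description, action.description)
    @decisions.last
  end
end

log = DecisionLog.new
group = { running: 1, desired: 2 }
log.apply(:periodic_check, GroupUnderCapacity.new, BootHost.new, group)
log.apply(:periodic_check, GroupUnderCapacity.new, BootHost.new, group) # no-op: at capacity
log.decisions.each { |decision| puts decision }
```

Only the first call produces a decision, because the second one finds the group already at its desired size.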

MAPE-K, originally described by IBM, is a great model for thinking about and building such adaptation loops. It stands for Monitor, Analyze, Plan and Execute over a shared Knowledge base. While it is up to you how each of these parts is implemented, and whether each of them lives in its own component at all, the model provides enough guidance on how to externalize such control mechanisms by building sensors and effectors into your controlled system. In my case, both sensors and effectors are part of BoxConfig’s HTTP API, which lets the control mechanism discover the current state of a group and modify it.
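A minimal sketch of one pass through a MAPE-K loop might look like the following. The real sensors and effectors are HTTP calls to BoxConfig; here a hypothetical in-memory `FakeGroupApi` stands in for them, and the `ControlLoop` class, its method names, and the layout of the knowledge hash are assumptions for illustration.

```ruby
# Hypothetical in-memory stand-in for the managed system's API.
class FakeGroupApi
  def initialize(hosts)
    @hosts = hosts
  end

  # Sensor: report how many hosts are currently running.
  def running_count
    @hosts.count { |host| host[:status] == :running }
  end

  # Effector: change the managed system by adding a running host.
  def boot_host
    @hosts << { status: :running }
  end
end

class ControlLoop
  def initialize(api, knowledge)
    @api = api
    @knowledge = knowledge # shared knowledge: desired state, observations, history
  end

  # Monitor: read the current state through the sensor.
  def monitor
    @knowledge[:observed] = @api.running_count
  end

  # Analyze: compare the observed state with the desired state.
  def analyze
    @knowledge[:desired] - @knowledge[:observed]
  end

  # Plan: turn the shortfall into a list of effector calls.
  def plan(shortfall)
    shortfall.positive? ? Array.new(shortfall, :boot_host) : []
  end

  # Execute: apply the plan through the effectors and remember it.
  def execute(steps)
    steps.each { |step| @api.public_send(step) }
    @knowledge[:history] << steps
  end

  def run_once
    monitor
    execute(plan(analyze))
  end
end

api = FakeGroupApi.new([{ status: :running }, { status: :terminated }])
knowledge = { desired: 3, history: [] }
control = ControlLoop.new(api, knowledge)

control.run_once # boots two hosts
control.run_once # nothing to do: the group already matches the desired state

p api.running_count   # => 3
p knowledge[:history] # => [[:boot_host, :boot_host], []]
```

Because every cycle starts from a fresh observation rather than a precomputed plan, a host that disappears between cycles is simply picked up as a new shortfall on the next pass.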

Conclusion

While the described system is at a very early prototype stage, and the framework I end up with might be very different, the progress I have made so far leads me to believe that this approach can be used to solve a variety of problems that developers and system administrators face today, including but not limited to:

- Configuration management

- Process monitoring and management

- Intelligent deployment

- Cluster auto-scaling and self-healing

- Business rules enforcement and SLAs

I am currently in the process of finding the best approach for implementing such a framework, with a number of requirements in mind, most importantly flexibility and ease of use.